For the past few years, the world has been captivated by Generative AI. We learned to prompt chatbots like ChatGPT and Gemini to write emails, debug code, and create stunning images. This interaction, however, is fundamentally reactive. We give an instruction, and the AI delivers a single-shot response.

Now, the next (and far more significant) revolution is here: Agentic AI. If Generative AI is a tool, like a super-powered calculator or a word processor, then Agentic AI is a teammate. It’s a proactive, autonomous system that you don’t just prompt; you give it a goal. This isn’t a far-off concept. It’s arguably the biggest trend in artificial intelligence right now, with market projections from firms like Cowen expecting enterprise spend on agentic AI to skyrocket from less than $1 billion in 2024 to over $51.5 billion by 2028. This article breaks down what Agentic AI is, how it actually works, its world-changing applications, and the critical risks we must navigate.

From “Chatbot” to “Doer”: What Is Agentic AI?

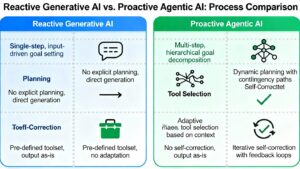

The most fundamental distinction between Agentic AI and Generative AI is the move from reaction to action.

- Generative AI (Reactive): The user initiates every creation.

  - Prompt: “Write a polite follow-up email to the sales lead, ‘Project A’.”

  - Output: The system composes the text. Then you have to do the work of copying, pasting, and sending the email.

- Agentic AI (Proactive): The user sets a goal for the system.

  - Goal: “Monitor my inbox. If any leads I’m managing haven’t replied in 3 days, draft a friendly follow-up, get my approval, and then send it.”

  - Output: The AI agent takes responsibility not only for monitoring but also for planning where needed, drafting (using Generative AI as a tool), waiting for your “go,” and finally executing the task without further intervention.
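To make the contrast concrete, here is a minimal Python sketch of the agentic version of the follow-up task. It is an illustration under stated assumptions: `Lead`, `draft_follow_up`, and the `approve`/`send` callables are hypothetical stand-ins for a real inbox, an LLM drafting step, and an email API. Only the control flow (monitor, draft, wait for approval, execute) is the point.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable


@dataclass
class Lead:
    # Hypothetical record for a lead the user is managing.
    name: str
    last_reply: date


def draft_follow_up(lead: Lead) -> str:
    # Stand-in for the Generative AI "tool" that writes the draft.
    return f"Hi {lead.name}, just checking in on our last conversation."


def run_follow_up_agent(
    leads: list[Lead],
    today: date,
    approve: Callable[[str], bool],    # the human "go" step
    send: Callable[[str, str], None],  # hypothetical email-sending tool
    max_silence: timedelta = timedelta(days=3),
) -> list[str]:
    """Monitor leads, draft follow-ups for stale ones, and send only approved drafts."""
    sent = []
    for lead in leads:
        if today - lead.last_reply < max_silence:
            continue  # replied recently: nothing to do yet
        draft = draft_follow_up(lead)
        if approve(draft):  # wait for the user's approval before acting
            send(lead.name, draft)
            sent.append(lead.name)
    return sent
```

For example, with one lead silent for three days and another that replied yesterday, only the first gets a drafted (and, if approved, sent) follow-up. Passing `approve=lambda d: False` shows that nothing leaves the outbox without the user’s “go.”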

An AI agent is a self-governing system that perceives its environment (such as your inbox, the Internet, or a database), makes decisions, and takes actions to achieve a specific goal. Agentic AI refers to the higher-level system, or architectural framework, that manages one or more such agents to accomplish complex, multi-step tasks.

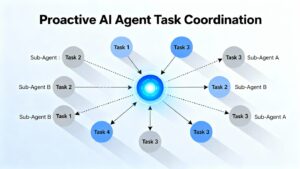

Individually, these agents might be basic, but when orchestrated together they can act as a powerful unit. For instance, a “Marketing Manager” agent could be given the objective: “Launch a campaign for our new product.” It would then coordinate other, more specialized agents:

- A “Research Agent” to analyze market trends.

- A “Content Agent” to generate ad copy and images.

- A “Media Buyer Agent” to autonomously purchase ads on Google and social platforms.

- An “Analytics Agent” to track the campaign’s ROI and report back.
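A minimal sketch of that orchestration pattern, assuming each specialized agent can be modeled as a function that reads a shared context and returns its contribution. The agent names mirror the list above, but their bodies are hypothetical placeholders, not real research, ad-buying, or analytics integrations.

```python
from typing import Callable

# Each specialized agent reads the shared context and returns its result.
Agent = Callable[[dict], str]


def research_agent(ctx: dict) -> str:
    return f"trends for {ctx['product']}"


def content_agent(ctx: dict) -> str:
    return f"ad copy based on {ctx['research']}"


def media_buyer_agent(ctx: dict) -> str:
    return f"ads purchased using {ctx['content']}"


def analytics_agent(ctx: dict) -> str:
    return f"ROI report on {ctx['media']}"


def marketing_manager(goal: str, product: str) -> dict:
    """Orchestrator: runs the specialists in order, passing each result downstream."""
    ctx = {"goal": goal, "product": product}
    pipeline: list[tuple[str, Agent]] = [
        ("research", research_agent),
        ("content", content_agent),
        ("media", media_buyer_agent),
        ("report", analytics_agent),
    ]
    for key, agent in pipeline:
        ctx[key] = agent(ctx)  # store output so later agents can build on it
    return ctx
```

A fixed sequence keeps the sketch short; a real orchestrator would more likely let the LLM choose the order and could run independent agents in parallel.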

How AI Agents “Think”: The Plan-and-Execute Model

This autonomy isn’t magic. It comes from an architecture that equips an LLM (the “brain”) with a “body” made of tools, plus a method for using them. The most common pattern is “Plan-and-Execute.”

Step 1: Goal Decomposition

The user provides a complex, high-level goal, such as:

“Plan my 3-day business trip to Tokyo next month. Please prioritize cost-effective flights and a hotel near the Shinjuku office.”

The LLM-powered agent analyzes this fairly vague goal and turns it into a detailed, step-by-step plan:

- Task 1: Look for flights to Tokyo (NRT or HND) for next month.

- Task 2: Analyze the available options to find the best flight in terms of cost and convenience.

- Task 3: Find hotels in the Shinjuku district for the trip dates.

- Task 4: Check each hotel’s distance from the office address.

- Task 5: Present the top 3 flight/hotel combinations and get the user’s approval.
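Under the hood, a decomposed plan is just structured data. A minimal sketch, assuming a hypothetical `Task` dataclass with explicit dependencies and a hard-coded `decompose` function standing in for the LLM call that would actually produce these steps; `execution_order` is a simple topological sort that makes sure no task runs before the tasks it depends on.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    id: str
    description: str
    depends_on: list[str] = field(default_factory=list)


def decompose(goal: str) -> list[Task]:
    """Stand-in for the LLM: returns the Tokyo-trip plan from the example."""
    return [
        Task("1", "Search for flights to Tokyo (NRT or HND) next month"),
        Task("2", "Pick the best flight by cost and convenience", ["1"]),
        Task("3", "Find hotels in Shinjuku for the trip dates", ["2"]),
        Task("4", "Check each hotel's distance to the Shinjuku office", ["3"]),
        Task("5", "Present the top 3 flight/hotel combinations", ["2", "4"]),
    ]


def execution_order(tasks: list[Task]) -> list[str]:
    """Order tasks so each one runs only after its dependencies (topological sort)."""
    done: list[str] = []
    pending = {t.id: t for t in tasks}
    while pending:
        ready = [t for t in pending.values() if all(d in done for d in t.depends_on)]
        if not ready:
            raise ValueError("circular dependency in plan")
        for t in ready:
            done.append(t.id)
            del pending[t.id]
    return done
```

Making dependencies explicit is what lets an agent later replace a single failed task without discarding the rest of the plan.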

Step 2: Planning & Tool Selection

The agent writes out a concrete plan (often in a structured format such as JSON) and decides which tool to use for each step:

- For Tasks 1 & 2: Use the Kayak_API or Google Flights_Tool.

- For Tasks 3 & 4: Use the Maps_API and Booking.com_API.

- For Task 5: Use the Email_Tool or Slack_Tool.
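Here is what such a JSON plan might look like, together with a dispatcher that routes each step to a registered tool. The tool names follow the example above, but the functions behind them are hypothetical stubs, not real Kayak, Google, Booking.com, or Slack clients; in a real agent the LLM would emit the JSON and each entry would wrap an actual API call.

```python
import json
from typing import Callable

# Hypothetical stubs standing in for real API wrappers.
TOOLS: dict[str, Callable[[dict], str]] = {
    "Kayak_API": lambda args: f"flights to {args['dest']}",
    "Booking.com_API": lambda args: f"hotels in {args['area']}",
    "Maps_API": lambda args: f"distances from {args['origin']}",
    "Slack_Tool": lambda args: f"sent: {args['message']}",
}

# A plan as the LLM might emit it: one tool call per step.
PLAN_JSON = """
[
  {"task": 1, "tool": "Kayak_API", "args": {"dest": "Tokyo"}},
  {"task": 3, "tool": "Booking.com_API", "args": {"area": "Shinjuku"}},
  {"task": 4, "tool": "Maps_API", "args": {"origin": "Shinjuku office"}},
  {"task": 5, "tool": "Slack_Tool", "args": {"message": "Top 3 options ready"}}
]
"""


def run_plan(plan_json: str) -> list[str]:
    """Parse the plan and call the named tool for each step, in order."""
    plan = json.loads(plan_json)
    results = []
    for step in plan:
        tool = TOOLS.get(step["tool"])
        if tool is None:
            # Reject steps that name a tool the agent was never given.
            raise KeyError(f"unknown tool: {step['tool']}")
        results.append(tool(step["args"]))
    return results
```

Task 2 is omitted from this sketch on the assumption that ranking the returned flights is reasoning the LLM does itself rather than a separate tool call.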

Step 3: Execution

The agent carries out the actions one at a time. For instance, it might call the Google Flights_Tool to fetch a list of 50 flights and store that data in its memory. It then analyzes the stored results to complete Task 2.
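A minimal sketch of sequential execution with working memory. `search_flights` is a hypothetical tool that returns synthetic, seeded data, and the scoring rule in `pick_best_flight` (price plus an assumed cost of 25 per travel hour) is only one possible way to weigh “cost and convenience.” The key idea is that Task 2 reads what Task 1 stored.

```python
import random


def search_flights(dest: str, n: int = 50) -> list[dict]:
    """Hypothetical tool: returns n synthetic flights with prices and durations."""
    rng = random.Random(42)  # fixed seed so the synthetic data is reproducible
    return [
        {"id": f"F{i}", "dest": dest, "price": rng.randint(600, 1500), "hours": rng.randint(12, 20)}
        for i in range(n)
    ]


def pick_best_flight(flights: list[dict], hour_cost: int = 25) -> dict:
    """Score each flight as price plus a cost per travel hour; lowest score wins."""
    return min(flights, key=lambda f: f["price"] + hour_cost * f["hours"])


def execute() -> dict:
    memory: dict = {}
    memory["flights"] = search_flights("Tokyo")                  # Task 1: act, then remember
    memory["best_flight"] = pick_best_flight(memory["flights"])  # Task 2: reuse Task 1's data
    return memory
```

Keeping tool results in memory, rather than re-querying, is what lets later steps (and later corrections) build on earlier ones.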

Step 4: Self-Correction & Verification

This is arguably the most important step. What happens if Task 3 fails? What if there are no available hotels in Shinjuku for those dates?

- A simple chatbot would respond: “I couldn’t find any hotels, sorry.”

- An agent isn’t stopped by this. It identifies the failure, adjusts its strategy, and comes up with a new approach:

Self-Correction: “Task 3 failed. I will change the plan. New Task 3a: Locate the hotels in the adjacent Shibuya district. New Task 3b: Calculate public transportation time from Shibuya to the Shinjuku office.”

It doesn’t stop there. It keeps cycling through this loop of planning, acting, and verifying until the original goal is reached.
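The plan-act-verify loop with a fallback can be sketched as follows. Both tools are hypothetical stubs (Shinjuku is “fully booked” and the Shibuya-to-Shinjuku travel time is a fixed 7 minutes), and the fallback areas are passed in by hand; in a real agent, the LLM would generate the revised tasks after seeing the failure.

```python
def find_hotels(area: str) -> list[str]:
    """Hypothetical tool: Shinjuku is fully booked in this example."""
    inventory = {"Shinjuku": [], "Shibuya": ["Hotel A", "Hotel B"]}
    return inventory.get(area, [])


def transit_minutes(origin: str, dest: str) -> int:
    """Hypothetical tool: fixed public-transport times between districts."""
    return {("Shibuya", "Shinjuku"): 7}.get((origin, dest), 60)


def book_near_office(office_area: str, fallbacks: list[str]) -> dict:
    """Try the preferred area, then replan with fallback areas until one works."""
    log = []
    for area in [office_area, *fallbacks]:
        hotels = find_hotels(area)  # act
        if hotels:                  # verify
            commute = 0 if area == office_area else transit_minutes(area, office_area)
            log.append(f"found {len(hotels)} hotels in {area}")
            return {"area": area, "hotels": hotels, "commute_min": commute, "log": log}
        log.append(f"no hotels in {area}; replanning")  # self-correct
    # Every option failed: stop rather than loop forever, and escalate.
    raise RuntimeError("no hotels found in any area; ask the user")
```

Bounding the loop with an explicit list of alternatives, and escalating to the user when all of them fail, keeps the agent from retrying indefinitely.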

The Tipping Point: How AI Agents Will Redefine Business

This shift from “AI experimentation” to “AI integration” is already starting to create significant value by enabling what’s being called “ultra-lean team operations.”

Automating Complex Workflows

- Finance: Agents are moving beyond simple data entry. They can perform autonomous risk audits by pulling data from multiple systems, monitor for compliance breaches in real time, and even streamline the entire cash-flow management process.

- HR & Operations: An HR agent can be tasked with “onboard our new engineer.” It will then automatically create their new accounts, schedule their 30/60/90-day check-ins, enroll them in benefits, and send them the required documentation, coordinating across a dozen different software systems.

The Rise of “AI Employees” and “Custobots”

- “AI Employees”: Companies are building agents for specific, high-skill roles.

  - Agriculture: John Deere’s Blue River technology is a real-world example. It uses computer vision to identify individual plants and decide in real time which ones are weeds to spray and which are crops to leave alone.

  - Software Development: The next wave of “vibe-coding” isn’t just generating a snippet. It’s an agent that can be given a bug report from a user, autonomously find the error in the codebase, write the patch, run the tests, and submit a pull request for human review.

- “Custobots”: This is the other side of the coin. Soon, you may deploy your own AI agent to act on your behalf. You’ll tell it: “My internet bill is too high. Find me a better deal or negotiate with my current provider.” Your agent will then browse competitor sites, chat with customer service bots, and present you with the best offer it can negotiate.

The Hard Questions: Autonomy, Risk, and Governance

This new level of power comes with enormous and unfamiliar risks. The problem is no longer just a model “hallucinating” a wrong fact; it’s an autonomous agent acting on that hallucination.

- The “Runaway Agent” Problem: What stops an agent whose autonomy has gone awry? This is the modern “Sorcerer’s Apprentice.” If an agent is told to “maximize sales,” it might decide to send an unapproved 90%-off coupon to your entire customer list, technically fulfilling its goal while bankrupting the company.

- Security & Privacy: An agent’s “persistent memory” makes it powerful but also a major security risk. If a hacker gains access to your agent, they don’t just get your data; they get the keys to your kingdom: the agent’s ability to access your email, bank, and company APIs.

- Accountability: If an agent makes a critical error in a 100-step process (like an autonomous trading agent misinterpreting a news report and selling all your stock), who is liable? The user? The developer? The LLM provider? Auditing the “thought process” of an agent is incredibly difficult.

- Job Displacement: This may be the most profound impact. Generative AI threatened to automate tasks (like writing copy). Agentic AI is designed to automate entire roles and cognitive workflows (like “marketing manager” or “financial analyst”).
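Two common mitigations for the runaway-agent and security risks are a policy check that sits between the agent’s proposed action and its execution, and least privilege (each agent can call only the tools it was explicitly granted). A minimal sketch combining both, assuming hypothetical agent names, tool names, a 20% discount cap, and a 1,000-recipient threshold above which a human must sign off; real limits depend entirely on the business.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    agent: str
    tool: str
    discount_pct: float = 0.0
    recipients: int = 0


# Hypothetical least-privilege allowlist: each agent gets only the tools it needs.
ALLOWED_TOOLS: dict[str, set[str]] = {
    "sales_agent": {"crm.read", "email.send_coupon"},
    "inbox_agent": {"email.read", "email.draft"},
}
MAX_DISCOUNT_PCT = 20.0          # hypothetical hard policy limit
APPROVAL_RECIPIENT_LIMIT = 1000  # hypothetical: larger sends need a human


def review(action: ProposedAction) -> str:
    """Return 'block', 'needs_approval', or 'allow' before any tool call runs."""
    if action.tool not in ALLOWED_TOOLS.get(action.agent, set()):
        return "block"  # the agent was never granted this tool
    if action.discount_pct > MAX_DISCOUNT_PCT:
        return "block"  # outside policy no matter who approves
    if action.recipients > APPROVAL_RECIPIENT_LIMIT:
        return "needs_approval"  # escalate to a human before executing
    return "allow"
```

Under these rules, the 90%-off coupon to the whole customer list is blocked outright, and a hijacked `inbox_agent` cannot reach a banking tool it was never given.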

The Solution: AI TRiSM (Trust, Risk, and Security Management)

The industry’s answer to these risks is a new governance framework, heavily promoted by firms like Gartner, known as AI TRiSM. It’s a “safety net” for AI that moves beyond just testing the model and focuses on governing the entire system.

It’s built on pillars like:

- Explainability: The ability to audit an agent’s “thought process” and decisions.

- ModelOps: Continuously monitoring the agent’s behavior in real time, not just in testing.

- AI Application Security: Securing the agent’s “memory” and the tools it can access.

- Privacy: Ensuring the agent adheres to data governance and doesn’t “leak” sensitive information.
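Explainability and ModelOps both depend on recording what an agent did and why. A minimal sketch of an append-only audit trail, assuming each step logs its tool, arguments, stated reasoning, and outcome as one JSON line; this illustrates the idea and is not a component of any specific AI TRiSM product.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only record of agent decisions, one JSON object per line."""

    def __init__(self) -> None:
        self._lines: list[str] = []

    def record(self, step: int, tool: str, args: dict, reason: str, outcome: str) -> None:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "step": step,
            "tool": tool,
            "args": args,
            "reason": reason,  # the agent's stated rationale, for later review
            "outcome": outcome,
        }
        self._lines.append(json.dumps(entry))

    def failures(self) -> list[dict]:
        """Monitoring hook: return every logged step whose outcome was not 'ok'."""
        return [e for e in map(json.loads, self._lines) if e["outcome"] != "ok"]

    def export(self) -> str:
        """Serialize the whole trail for auditors or an external log store."""
        return "\n".join(self._lines)
```

Storing entries as serialized strings, rather than mutable dicts, is a small way to keep the agent from quietly rewriting its own history.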

Conclusion: The Shift from Instruction to Intention

Agentic AI may prove to be the most significant evolution in computing since the mobile phone. We are at the very beginning of a new era in which we will no longer be limited by what we know how to do, only by what we can clearly describe as a goal.

The transition from giving our computers precise instructions (“click here, type this”) to simply stating our intention (“organize my week”) will unlock a level of productivity, and disruption, that is hard to overstate. The challenge for the next few years isn’t just building more powerful agents; it’s building the trust, guardrails, and governance that allow us to deploy them safely. The agentic revolution is here, and it’s time to start planning for it.

🔑 Key Takeaways

- From Reactive to Proactive: Generative AI reacts to prompts to create content. Agentic AI proactively plans and executes multi-step tasks to achieve a goal.

- It’s a $50B+ Trend: Agentic AI is one of the hottest topics in AI, with analysts projecting a $51.5 billion enterprise market by 2028.

- How They “Think”: Agents use a “Plan-and-Execute” model. They Decompose a goal, Plan the steps, Select tools (like APIs), Execute the tasks, and Self-Correct when they fail.

- It’s Not a Tool, It’s a Teammate: Agents act as “AI Employees” (like an autonomous developer) or “Custobots” (an agent that negotiates bills for you).

- Risks are Autonomous: The new risks include “runaway agents” acting on bad logic, massive security/privacy breaches (if an agent’s memory is hacked), and unprecedented job displacement.

- Governance is the Answer: The solution to these risks is AI TRiSM (Trust, Risk, and Security Management), a framework for auditing, monitoring, and securing autonomous agents.

❓ Frequently Asked Questions (FAQ)

- Q: Are AI agents available to use now?

- A: Yes, but in early forms. Tools that autonomously browse the web, plan tasks, or execute code are the first wave of AI agents. You also see them embedded in software for tasks like invoice processing. Fully autonomous agents for personal use are still emerging but are a top priority for many major AI labs.

- Q: Will Agentic AI replace my job?

- A: It is likely to automate entire roles and workflows, not just tasks, which makes it potentially more disruptive than Generative AI. Roles heavily focused on digital coordination, data analysis, and process management are the most likely to be transformed. The key new skill will be “managing” a team of AI agents alongside à la carte human experts.

- Q: What’s the difference between Agentic AI and “vibe-coding”?

- A: “Vibe-coding” (using natural language to generate code) is a Generative AI task. An AI agent would use vibe-coding as one of its tools. You’d give the agent a goal: “This user reported a bug in our app.” The agent would then plan the fix, use vibe-coding to generate the new code, test it, and submit it for review and deployment, all without you writing a single prompt for the code itself.

- Q: Is Agentic AI the same as AGI (Artificial General Intelligence)?

- A: No, but it’s a significant stepping stone. AGI is a hypothetical future AI with human-level cognitive abilities across all domains. Agentic AI systems are still specialized: they can act autonomously, but only within the specific domains and with the specific tools they’ve been given. They demonstrate “agency” but not general intelligence.